Can you tell whether a picture of a person is real or not? Maybe for now, but in a few years it may become impossible to tell fake photos from real ones. Today I will show you how I trained an AI to generate pictures of celebrities. I used the PyTorch library and its tutorials to build this deep learning project. I say ‘generating celebrities’ because my training set is a dataset called CelebA, which contains about 200 000 labeled pictures of celebrities (resized to 64x64x3 for training).

DCGANs

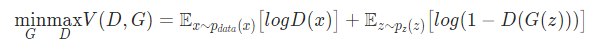

We will not go deep into the details, but the architecture is based on DCGANs (deep convolutional generative adversarial networks) from the paper ‘Unsupervised Representation Learning With Deep Convolutional Generative Adversarial Networks’. It consists of a Discriminator, which returns the probability that an image comes from the training data, and a Generator, which turns a vector sampled from a normal distribution into an image. The Discriminator and the Generator fight each other (hence ‘adversarial’): the Discriminator tries to maximize the loss function, while the Generator tries to minimize it.
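For reference, the standard GAN objective from the original GAN paper (Goodfellow et al., 2014), on which DCGANs build, is usually written as follows. Here $x$ is a real image, $z$ is a latent vector drawn from the normal distribution, $D$ is the Discriminator and $G$ is the Generator:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

In practice the Generator is usually trained to maximize $\log D(G(z))$ rather than to minimize $\log(1 - D(G(z)))$, which is also what the comments in the training loop of my script describe.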

Result

Sure, many of them look quite unrealistic, but some of them look really nice.

Training

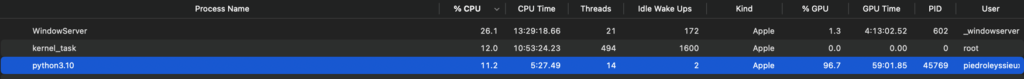

It took me 7 long hours to train the AI through all the epochs, and it was using 97% of my GPU. After 4-5 hours the images weren’t really improving anymore, so the algorithm needs to be improved to get better results. I saved the weights from this long training run in a .pth file. If you want this file for any reason, don’t hesitate to send me an e-mail (pierreleyssieux4@gmail.com).
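To make the training step more concrete, here is a minimal sketch of how binary cross-entropy turns into the two sides of the adversarial game. It assumes the `nn.BCELoss` criterion and the 1/0 real/fake labels used in the script; the discriminator outputs below are made-up numbers chosen for illustration, not values from my training run.

```python
import torch
import torch.nn as nn

criterion = nn.BCELoss()

# Hypothetical discriminator outputs (probabilities), for illustration only.
d_real = torch.tensor([0.9, 0.8])  # D(x) on a batch of real images
d_fake = torch.tensor([0.3, 0.1])  # D(G(z)) on a batch of generated images

real_label = torch.ones_like(d_real)
fake_label = torch.zeros_like(d_fake)

# Discriminator loss: -mean(log D(x)) - mean(log(1 - D(G(z)))).
# Minimizing it is the same as maximizing log D(x) + log(1 - D(G(z))).
loss_d = criterion(d_real, real_label) + criterion(d_fake, fake_label)

# Generator loss: fake images are labeled as real, giving -mean(log D(G(z))).
loss_g = criterion(d_fake, torch.ones_like(d_fake))

# The same quantities written out by hand.
manual_d = -(torch.log(d_real).mean() + torch.log(1 - d_fake).mean())
manual_g = -torch.log(d_fake).mean()

print(f"loss_d={loss_d.item():.4f} manual_d={manual_d.item():.4f}")
print(f"loss_g={loss_g.item():.4f} manual_g={manual_g.item():.4f}")
```

The two printed pairs should match, which shows that the BCE criterion is just a convenient way of computing the log terms of the GAN loss.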

The Code

Most of the code comes from the PyTorch website. I trained the algorithm myself and made a few changes to obtain the final result. The full script is listed below; the dataset and training parts are commented out, so running the file does not retrain the model and only generates images from the weight file.
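The save-then-reload pattern that makes this possible can be sketched in isolation. This is a minimal example, assuming a checkpoint dictionary with a `generator_state_dict` key like the one the script saves; the `TinyGenerator` model and the temporary file path are hypothetical stand-ins, not my real network or weight file.

```python
import os
import tempfile

import torch
import torch.nn as nn


class TinyGenerator(nn.Module):
    """Hypothetical stand-in for the real Generator (one layer only)."""

    def __init__(self, nz=8):
        super().__init__()
        self.main = nn.Sequential(
            nn.ConvTranspose2d(nz, 3, 4, 1, 0, bias=False),
            nn.Tanh(),
        )

    def forward(self, x):
        return self.main(x)


trained = TinyGenerator()

# Save only the weights, under the same key the script uses.
path = os.path.join(tempfile.mkdtemp(), "toy_weights.pth")
torch.save({"generator_state_dict": trained.state_dict()}, path)

# Later (or in another run): rebuild the model and load the weights.
# map_location lets a checkpoint saved on a GPU load on a CPU-only machine.
restored = TinyGenerator()
checkpoint = torch.load(path, map_location="cpu")
restored.load_state_dict(checkpoint["generator_state_dict"])

# Generate without tracking gradients, since no training happens here.
noise = torch.randn(4, 8, 1, 1)
with torch.no_grad():
    images = restored(noise)

print(images.shape)  # torch.Size([4, 3, 4, 4])
```

Saving the `state_dict` rather than the whole model object keeps the file small and means the class definition only has to exist in the script that reloads it.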

from __future__ import print_function
# %matplotlib inline
import argparse
import os
import random
import torch
import torch.nn as nn
import torch.nn.parallel
import torch.backends.cudnn as cudnn
import torch.optim as optim
import torch.utils.data
import torchvision.datasets as dset
import torchvision.transforms as transforms
import torchvision.utils as vutils
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.animation as animation
import time
# from IPython.display import HTML

t1_start = time.perf_counter()

# Set random seed for reproducibility
# manualSeed = 999
# manualSeed = random.randint(1, 10000)  # use if you want new results
# random.seed(manualSeed)
# torch.manual_seed(manualSeed)

# Root directory of the dataset
dataroot = "data/celeba"
# Number of worker processes for the DataLoader
workers = 2
# Batch size during training
batch_size = 128
# Spatial size of the training images (they are resized to this size)
image_size = 64
# Number of channels in the images (3 for color)
nc = 3
# Size of the latent vector z (the generator input)
nz = 100
# Size of feature maps in the generator
ngf = 64
# Size of feature maps in the discriminator
ndf = 64
# Number of training epochs
num_epochs = 12
# Learning rate for the optimizers
lr = 0.0002
# Best beta1 hyperparameter for the Adam optimizers
beta1 = 0.5
# Number of GPUs available
ngpu = 1


# Custom weights initialization called on ``netG`` and ``netD``
def weights_init(m):
    classname = m.__class__.__name__
    if classname.find('Conv') != -1:
        nn.init.normal_(m.weight.data, 0.0, 0.02)
    elif classname.find('BatchNorm') != -1:
        nn.init.normal_(m.weight.data, 1.0, 0.02)
        nn.init.constant_(m.bias.data, 0)


# Generator code
class Generator(nn.Module):
    def __init__(self, ngpu):
        super(Generator, self).__init__()
        self.ngpu = ngpu
        self.main = nn.Sequential(
            # input is Z, going into a convolution
            nn.ConvTranspose2d(nz, ngf * 8, 4, 1, 0, bias=False),
            nn.BatchNorm2d(ngf * 8),
            nn.ReLU(True),
            # state size. ``(ngf*8) x 4 x 4``
            nn.ConvTranspose2d(ngf * 8, ngf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 4),
            nn.ReLU(True),
            # state size. ``(ngf*4) x 8 x 8``
            nn.ConvTranspose2d(ngf * 4, ngf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 2),
            nn.ReLU(True),
            # state size. ``(ngf*2) x 16 x 16``
            nn.ConvTranspose2d(ngf * 2, ngf, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf),
            nn.ReLU(True),
            # state size. ``(ngf) x 32 x 32``
            nn.ConvTranspose2d(ngf, nc, 4, 2, 1, bias=False),
            nn.Tanh()
            # state size. ``(nc) x 64 x 64``
        )

    def forward(self, input):
        return self.main(input)


# Discriminator code
class Discriminator(nn.Module):
    def __init__(self, ngpu):
        super(Discriminator, self).__init__()
        self.ngpu = ngpu
        self.main = nn.Sequential(
            # input is ``(nc) x 64 x 64``
            nn.Conv2d(nc, ndf, 4, 2, 1, bias=False),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. ``(ndf) x 32 x 32``
            nn.Conv2d(ndf, ndf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 2),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. ``(ndf*2) x 16 x 16``
            nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 4),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. ``(ndf*4) x 8 x 8``
            nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 8),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. ``(ndf*8) x 4 x 4``
            nn.Conv2d(ndf * 8, 1, 4, 1, 0, bias=False),
            nn.Sigmoid()
        )

    def forward(self, input):
        return self.main(input)


if __name__ == '__main__':
    # Create the dataset (commented out: only needed for training)
    # dataset = dset.ImageFolder(root=dataroot,
    #                            transform=transforms.Compose([
    #                                transforms.Resize(image_size),
    #                                transforms.CenterCrop(image_size),
    #                                transforms.ToTensor(),
    #                                transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
    #                            ]))
    # # Create the dataloader
    # dataloader = torch.utils.data.DataLoader(dataset, batch_size=batch_size,
    #                                          shuffle=True, num_workers=workers)

    # Choose the device
    device = "cpu"  # torch.device("mps" if torch.backends.mps.is_available() else "cpu")

    # Plot some training images
    # real_batch = next(iter(dataloader))
    # plt.figure(figsize=(8,8))
    # plt.axis("off")
    # plt.title("Training Images")
    # plt.imshow(np.transpose(vutils.make_grid(real_batch[0].to(device)[:64], padding=2, normalize=True).cpu(),(1,2,0)))
    # plt.show()

    # Create the generator
    netG = Generator(ngpu).to(device)
    # Wrap the generator in DataParallel if several GPUs are used (CUDA only)
    if str(device).startswith("cuda") and (ngpu > 1):
        netG = nn.DataParallel(netG, list(range(ngpu)))
    # Apply the ``weights_init`` function to randomly initialize all weights
    # to ``mean=0``, ``stdev=0.02``.
    netG.apply(weights_init)

    # Create the discriminator
    netD = Discriminator(ngpu).to(device)
    # Wrap the discriminator in DataParallel if several GPUs are used (CUDA only)
    if str(device).startswith("cuda") and (ngpu > 1):
        netD = nn.DataParallel(netD, list(range(ngpu)))
    # Apply the ``weights_init`` function to randomly initialize all weights
    # to ``mean=0``, ``stdev=0.02``.
    netD.apply(weights_init)

    # Print the model
    # print(netD)

    # Loss function: here binary cross-entropy (BCELoss)
    criterion = nn.BCELoss()

    # Create the fixed noise vector used to track the generator's progress
    fixed_noise = torch.randn(64, nz, 1, 1, device=device)

    # Label conventions for real and fake images
    real_label = 1.
    fake_label = 0.

    # Use the Adam optimizer for both the generator and the discriminator
    optimizerD = optim.Adam(netD.parameters(), lr=lr, betas=(beta1, 0.999))
    optimizerG = optim.Adam(netG.parameters(), lr=lr, betas=(beta1, 0.999))

    # Training loop (commented out so that running the file only generates images)

    # Lists to keep track of progress
    img_list = []
    G_losses = []
    D_losses = []
    iters = 0

    # print("Starting Training Loop...")
    # For each epoch
    # for epoch in range(num_epochs):
    #     # For each batch in the dataloader
    #     for i, data in enumerate(dataloader, 0):
    #         ############################
    #         # (1) Update D network: maximize log(D(x)) + log(1 - D(G(z)))
    #         ###########################
    #         ## Train with all-real batch
    #         netD.zero_grad()
    #         # Format batch
    #         real_cpu = data[0].to(device)
    #         b_size = real_cpu.size(0)
    #         label = torch.full((b_size,), real_label, dtype=torch.float, device=device)
    #         # Forward pass real batch through D
    #         output = netD(real_cpu).view(-1)
    #         # Calculate loss on all-real batch
    #         errD_real = criterion(output, label)
    #         # Calculate gradients for D in backward pass
    #         errD_real.backward()
    #         D_x = output.mean().item()
    #
    #         ## Train with all-fake batch
    #         # Generate batch of latent vectors
    #         noise = torch.randn(b_size, nz, 1, 1, device=device)
    #         # Generate fake image batch with G
    #         fake = netG(noise)
    #         label.fill_(fake_label)
    #         # Classify all fake batch with D
    #         output = netD(fake.detach()).view(-1)
    #         # Calculate D's loss on the all-fake batch
    #         errD_fake = criterion(output, label)
    #         # Calculate the gradients for this batch, accumulated (summed) with previous gradients
    #         errD_fake.backward()
    #         D_G_z1 = output.mean().item()
    #         # Compute error of D as sum over the fake and the real batches
    #         errD = errD_real + errD_fake
    #         # Update D
    #         optimizerD.step()
    #
    #         ############################
    #         # (2) Update G network: maximize log(D(G(z)))
    #         ###########################
    #         netG.zero_grad()
    #         label.fill_(real_label)  # fake labels are real for generator cost
    #         # Since we just updated D, perform another forward pass of all-fake batch through D
    #         output = netD(fake).view(-1)
    #         # Calculate G's loss based on this output
    #         errG = criterion(output, label)
    #         # Calculate gradients for G
    #         errG.backward()
    #         D_G_z2 = output.mean().item()
    #         # Update G
    #         optimizerG.step()
    #
    #         # Output training stats
    #         if i % 50 == 0:
    #             print('[%d/%d][%d/%d]\tLoss_D: %.4f\tLoss_G: %.4f\tD(x): %.4f\tD(G(z)): %.4f / %.4f'
    #                   % (epoch, num_epochs, i, len(dataloader),
    #                      errD.item(), errG.item(), D_x, D_G_z1, D_G_z2))
    #             print("device:", device)
    #
    #         # Save Losses for plotting later
    #         G_losses.append(errG.item())
    #         D_losses.append(errD.item())
    #
    #         # Check how the generator is doing by saving G's output on fixed_noise
    #         if (iters % 500 == 0) or ((epoch == num_epochs-1) and (i == len(dataloader)-1)):
    #             with torch.no_grad():
    #                 fake = netG(fixed_noise).detach().cpu()
    #             img_list.append(vutils.make_grid(fake, padding=2, normalize=True))
    #
    #         iters += 1
    #
    # # Save the weights of both networks once training is finished
    # torch.save({
    #     'generator_state_dict': netG.state_dict(),
    #     'discriminator_state_dict': netD.state_dict(),
    # }, 'gan_weights.pth')
    #
    # t1_stop = time.perf_counter()
    # print("time:", (t1_stop-t1_start), "s")
    #
    # fig = plt.figure()
    # ims = []
    # for i in range(len(img_list)):
    #     img_np = img_list[i].to("cpu").numpy()  # Convert the tensor to a NumPy array
    #     img_np = np.transpose(img_np, (1, 2, 0))  # Transpose the dimensions to (height, width, channels)
    #     img_np = (img_np * 255).astype(np.uint8)  # Convert the float values to uint8
    #     im = plt.imshow(img_np, animated=True)
    #     ims.append([im])
    #     plt.imsave(f"image{i}.jpg", img_np)
    # ani = animation.ArtistAnimation(fig, ims, interval=700, blit=True, repeat_delay=1000)
    # plt.show()

    # Load the generator weights saved after training
    PATH = "gan_weights_3_(12epochs).pth"
    # print(torch.load(PATH)['generator_state_dict'])
    model = Generator(ngpu=ngpu).to(device)
    # map_location lets weights saved on a GPU be loaded on the current device
    checkpoint = torch.load(PATH, map_location=device)
    model.load_state_dict(checkpoint['generator_state_dict'])

    # Generate noise
    noise = torch.randn(batch_size, nz, 1, 1, device=device)
    # print(noise.device)
    # No gradients are needed for generation, and NumPy cannot convert
    # tensors that still require gradients
    with torch.no_grad():
        fake_images = model(noise)

    # Display the images
    plt.figure(figsize=(8, 8))
    plt.axis("off")
    plt.title("Generated Images")
    plt.imshow(np.transpose(vutils.make_grid(fake_images[:32], padding=2, normalize=True).cpu(), (1, 2, 0)))
    plt.show()
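The `state size` comments in the Generator follow from the output-size formula documented for `nn.ConvTranspose2d`. As a quick sanity check, here is a small sketch that reproduces the progression from a 1x1 latent vector to a 64x64 image. It assumes dilation 1 and no output padding, which are the defaults the script relies on; the helper name `conv_transpose_out` is mine and is not part of PyTorch.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output height/width of a transposed convolution (dilation=1, output_padding=0)."""
    return (size - 1) * stride - 2 * padding + kernel


# (kernel, stride, padding) of the five ConvTranspose2d layers in the Generator.
layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

size = 1  # the latent vector z has spatial size 1 x 1
sizes = [size]
for kernel, stride, padding in layers:
    size = conv_transpose_out(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # [1, 4, 8, 16, 32, 64]
```

With a kernel of 4, a stride of 2 and a padding of 1, each layer exactly doubles the spatial size, which is why four such layers take the 4x4 feature map up to 64x64.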
